Overview

This project focused on improving the accuracy and adaptability of Automatic Speech Recognition (ASR) systems, particularly in dynamic and noisy environments. Using a Dynamic Attention Mechanism and Adaptive Knowledge Distillation, it aimed to enhance speech recognition for real-time applications in cyber-physical systems. The primary objective was a model that could adapt to varying acoustic environments without extensive retraining, making it suitable for deployment on resource-constrained devices. Two distinct deep learning models were employed, each addressing specific challenges of speech recognition under noise.

Core Challenges and Approach

A central challenge was ensuring robust speech recognition under unpredictable acoustic conditions, which demands both adaptability and computational efficiency. The traditional approach of training large ASR models is poorly suited to real-time applications in dynamic environments or on edge devices, where memory and compute are limited. The project addressed these issues by combining the power of large models with lightweight, adaptable models, using knowledge distillation and dynamically adjusted attention mechanisms.

The project explored two models:

Model 1 (CNN-GRU Hybrid Architecture):

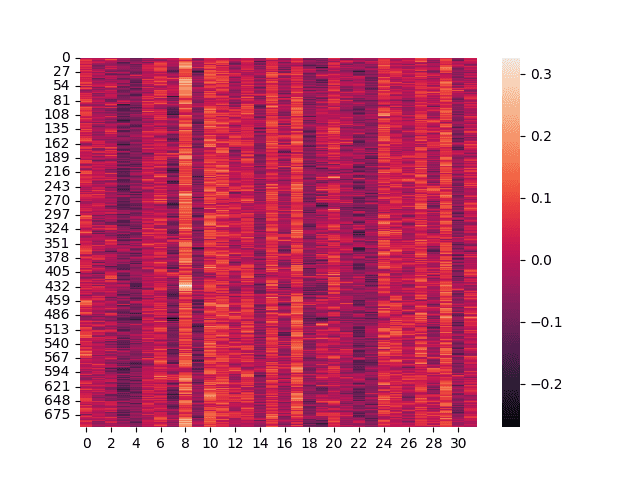
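The CNN-GRU hybrid can be illustrated with a small, dependency-free sketch: a residual 1-D convolution block over acoustic feature frames, followed by a bidirectional GRU whose forward and backward states are concatenated per frame. This is not the project's code. The layer sizes, the random initialization, the single conv block, and the names `residual_conv_block`, `GRUCell`, and `bidirectional_gru` are hypothetical, and a practical model would use a deep learning framework, stacked layers, trained weights, and an output layer on top.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def vadd(*vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vectors)]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def random_matrix(rng, rows, cols, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def residual_conv_block(frames, kernels):
    """1-D convolution over time (zero 'same' padding), ReLU, plus a skip connection.

    frames: T x C feature frames; kernels: K x C x C taps (K odd), so the
    output keeps the input shape and can be added back to it (residual).
    """
    pad = len(kernels) // 2
    out = []
    for t, x in enumerate(frames):
        acc = [0.0] * len(x)
        for k, W in enumerate(kernels):
            src = t + k - pad
            if 0 <= src < len(frames):
                acc = vadd(acc, matvec(W, frames[src]))
        out.append([xi + max(0.0, ai) for xi, ai in zip(x, acc)])
    return out


class GRUCell:
    """Single GRU cell: update gate z, reset gate r, candidate state n."""

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        self.W = {g: random_matrix(rng, hidden_size, input_size) for g in "zrn"}
        self.U = {g: random_matrix(rng, hidden_size, hidden_size) for g in "zrn"}
        self.b = {g: [0.0] * hidden_size for g in "zrn"}

    def step(self, x, h):
        z = [sigmoid(v) for v in vadd(matvec(self.W["z"], x), matvec(self.U["z"], h), self.b["z"])]
        r = [sigmoid(v) for v in vadd(matvec(self.W["r"], x), matvec(self.U["r"], h), self.b["r"])]
        rh = [ri * hi for ri, hi in zip(r, h)]
        n = [math.tanh(v) for v in vadd(matvec(self.W["n"], x), matvec(self.U["n"], rh), self.b["n"])]
        # Interpolate between the previous state and the candidate state.
        return [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]


def run_gru(cell, frames):
    h = [0.0] * cell.hidden_size
    states = []
    for x in frames:
        h = cell.step(x, h)
        states.append(h)
    return states


def bidirectional_gru(fwd, bwd, frames):
    """Concatenate forward states with backward states re-aligned in time."""
    forward = run_gru(fwd, frames)
    backward = run_gru(bwd, frames[::-1])[::-1]
    return [f + b for f, b in zip(forward, backward)]


# Hypothetical sizes: 5 frames of 4 features each, 3 hidden units per direction.
rng = random.Random(0)
frames = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(5)]
kernels = [random_matrix(rng, 4, 4) for _ in range(3)]
features = residual_conv_block(frames, kernels)
encoded = bidirectional_gru(GRUCell(4, 3, rng), GRUCell(4, 3, rng), features)
print(len(encoded), len(encoded[0]))  # 5 frames, 2 * 3 = 6 values per frame
```

Running both directions and concatenating the states gives every frame access to both past and future context, which is the motivation for bidirectional processing.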

This model combined a Convolutional Neural Network (CNN) front end with Gated Recurrent Unit (GRU) layers and residual connections, allowing it to capture both local spectral patterns and temporal structure in the audio. The CNN layers extracted high-level acoustic features, while the GRU layers modeled temporal dependencies in the speech; the GRUs processed each sequence bidirectionally (forward and backward in time) to better capture long-range dependencies. The model was trained on the LibriSpeech dataset, a widely used speech recognition benchmark.

Model 2 (Wav2Vec 2.0):

The second model used Wav2Vec 2.0, a state-of-the-art pre-trained speech model. It is built on a Transformer-based architecture and performs end-to-end speech recognition, handling large variations in audio input effectively. Wav2Vec 2.0 improves ASR performance by learning representations directly from raw audio, bypassing handcrafted features such as MFCCs (Mel-frequency cepstral coefficients). The model was particularly effective in noisy environments owing to its pre-training on large-scale datasets.
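Fine-tuned Wav2Vec 2.0 ASR checkpoints are commonly trained with a Connectionist Temporal Classification (CTC) output layer that emits one token distribution per audio frame. The section does not state how the project decoded its outputs, so the sketch below assumes a CTC head with greedy (best-path) decoding: take the most likely token in each frame, merge consecutive repeats, then drop the blank token. The vocabulary, with `_` as the blank and `|` as the word delimiter, is a made-up example, and `one_hot_frames` is a hypothetical helper that fabricates toy logits.

```python
BLANK = "_"
WORD_SEP = "|"
VOCAB = [BLANK, WORD_SEP, "A", "C", "T"]  # hypothetical character vocabulary


def ctc_greedy_decode(frame_logits, vocab=VOCAB, blank=BLANK, word_sep=WORD_SEP):
    """Best-path CTC decoding: per-frame argmax, merge repeats, drop blanks."""
    best = [max(range(len(row)), key=row.__getitem__) for row in frame_logits]
    tokens = []
    previous = None
    for idx in best:
        # Emit a token only when it differs from the previous frame's token;
        # a blank between two identical tokens is what allows a real repeat.
        if idx != previous and vocab[idx] != blank:
            tokens.append(vocab[idx])
        previous = idx
    return "".join(tokens).replace(word_sep, " ").strip()


def one_hot_frames(symbols, vocab=VOCAB):
    """Build toy per-frame logits that put all the mass on the given symbols."""
    return [[1.0 if v == s else 0.0 for v in vocab] for s in symbols]


print(ctc_greedy_decode(one_hot_frames("CC_A_TT||AA_CT")))  # prints "CAT ACT"
```

Greedy decoding is the simplest choice; beam search, optionally with a language model, usually trades extra compute for lower error rates.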

Key Components:

Adaptive Knowledge Distillation:
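A common way to implement a teacher-student objective is the temperature-scaled distillation loss popularized by Hinton et al.: the student is trained to match the teacher's softened output distribution (a KL-divergence term scaled by T²) while still fitting the ground-truth label (a cross-entropy term). The section does not specify the project's exact loss or what makes its distillation adaptive, so the fixed `temperature` and `alpha` below are assumptions, as are the function names; an adaptive variant might, for example, adjust these values during training.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions (terms with p_i = 0 contribute 0)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, target, temperature=2.0, alpha=0.5):
    """alpha * soft (teacher-matching) loss + (1 - alpha) * hard-label cross-entropy.

    In an ASR model this would be computed per output frame and averaged;
    temperature and alpha are hypothetical fixed hyperparameters here.
    """
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # Scaling by T^2 keeps the soft-target gradients comparable in size
    # to the hard-label term when the temperature changes.
    soft_loss = kl_divergence(teacher_soft, student_soft) * temperature ** 2
    hard_loss = -math.log(softmax(student_logits)[target])
    return alpha * soft_loss + (1 - alpha) * hard_loss


teacher = [4.0, 1.0, 0.5]
print(distillation_loss([3.5, 1.2, 0.4], teacher, target=0))  # student close to teacher
print(distillation_loss([0.5, 3.0, 1.0], teacher, target=0))  # student far from teacher
```

A higher temperature exposes more of the teacher's "dark knowledge" about which wrong classes are nearly right, which is the information a small student cannot easily learn from hard labels alone.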

The project employed a teacher-student framework in which large pre-trained models (such as Wav2Vec 2.0) acted as teachers. Their knowledge was distilled into smaller, more efficient student models without significant loss in performance: the students learned to mimic the teachers' predictions, maintaining high accuracy with reduced computational resources. The distillation process was designed so that the student models could recognize speech in both clean and noisy environments.

Dynamic Attention Mechanism:

A core contribution of the project was a Dynamic Attention Mechanism that adjusted attention weights according to the acoustic environment. Unlike a static attention mechanism, whose weighting behavior does not change with the acoustic conditions, the dynamic mechanism monitored noise levels and adapted the attention distribution accordingly. This let the model focus on the more relevant, less corrupted segments of the audio signal, improving recognition accuracy in noisy settings.
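The section does not give the mechanism's equations, so the following is only one hypothetical way to make attention depend on noise: standard scaled dot-product attention whose scores receive an additive bias proportional to an estimated per-frame signal-to-noise ratio (SNR), so that cleaner frames are favored. The bias weight `snr_weight`, the SNR input, and the function name `noise_aware_attention` are assumptions; with `snr_weight = 0` the function reduces to ordinary attention.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def noise_aware_attention(query, keys, values, frame_snr_db, snr_weight=0.1):
    """Scaled dot-product attention with a hypothetical SNR-dependent bias.

    frame_snr_db[i] is an estimate of frame i's SNR in dB; frames judged
    noisier get lower scores before the softmax, hence less attention.
    """
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) + snr_weight * snr
        for key, snr in zip(keys, frame_snr_db)
    ]
    weights = softmax(scores)
    # Context vector: attention-weighted average of the value vectors.
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, context


# Three frames with identical keys, so only the SNR estimates differ.
query = [1.0, 0.0]
keys = [[0.5, 0.5]] * 3
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, context = noise_aware_attention(query, keys, values, frame_snr_db=[20.0, 0.0, 10.0])
print([round(w, 3) for w in weights])
```

An additive bias keeps the content-based part of attention intact and only shifts probability mass toward cleaner frames; scaling the softmax temperature by the noise level would be another plausible design.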

Key Features

Multi-Model Approach:

Offering both a CNN-GRU hybrid and a Transformer-based architecture provided flexibility in deployment: the CNN-GRU model was optimized for edge devices with limited resources, while the Wav2Vec 2.0 model offered higher accuracy in more complex or resource-rich environments.

Knowledge Distillation for Edge Devices:

Distilling knowledge from large models into smaller ones enabled efficient deployment on edge devices without significant loss in accuracy, letting resource-constrained devices benefit from the advances of larger models such as Wav2Vec 2.0.

Real-Time Adaptability:

The dynamic attention mechanism allowed the models to adjust their internal focus in real time based on the noise level of the input audio. This adaptability ensured robust speech recognition performance even under fluctuating acoustic conditions, such as in noisy public spaces or environments with overlapping speech.
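Adapting in real time requires a running estimate of the noise level. How the project measured it is not described; a simple stand-in, offered purely as an assumption, is to track a noise floor from short-time frame energies, letting the floor drop quickly on quiet frames and rise only slowly on loud ones, and to report each frame's energy relative to that floor as a rough SNR in dB. The class name `NoiseFloorTracker` and the `rise`/`fall` rates are hypothetical; per-frame values like these could drive a noise-dependent attention mechanism such as the one described above.

```python
import math


class NoiseFloorTracker:
    """Hypothetical streaming SNR estimator based on a tracked noise floor."""

    def __init__(self, rise=0.01, fall=0.5, eps=1e-10):
        self.rise = rise    # slow upward drift when a frame is louder than the floor
        self.fall = fall    # fast downward correction when a frame is quieter
        self.eps = eps      # avoids log of zero on silent frames
        self.floor = None   # current noise-floor energy estimate

    def update(self, frame):
        """Consume one frame of samples; return its estimated SNR in dB."""
        energy = sum(s * s for s in frame) / len(frame) + self.eps
        if self.floor is None:
            self.floor = energy
        else:
            rate = self.rise if energy > self.floor else self.fall
            self.floor += rate * (energy - self.floor)
        return 10.0 * math.log10(energy / self.floor)


tracker = NoiseFloorTracker()
quiet = [0.01] * 160   # e.g. 10 ms of low-level noise at 16 kHz (hypothetical)
loud = [0.5] * 160     # a much louder, speech-like frame
snrs = [tracker.update(quiet) for _ in range(5)] + [tracker.update(loud)]
print([round(s, 1) for s in snrs])
```

The asymmetric rates are the key design choice: speech bursts barely move the floor, while a drop in background noise is picked up within a few frames.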

Outcome

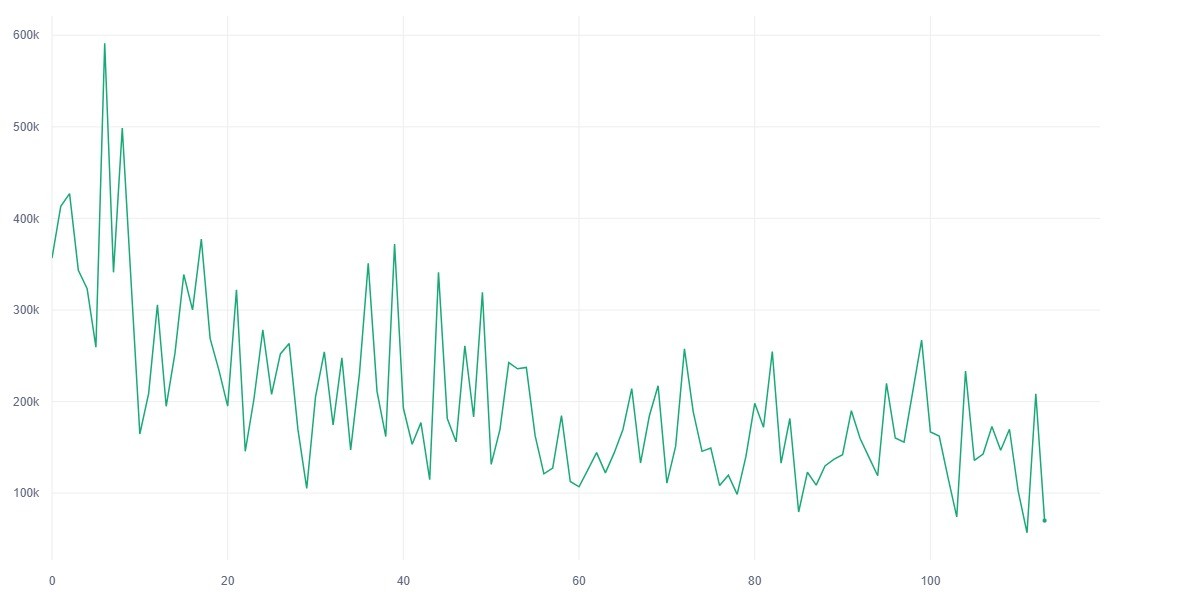

The project demonstrated a significant reduction in Word Error Rate (WER) and Character Error Rate (CER), particularly in noisy environments. The CNN-GRU hybrid model performed well on edge devices, with an optimized balance between accuracy and computational efficiency. The Wav2Vec 2.0 model showed improved performance in complex environments with variable noise levels, making it well suited to real-time applications in cyber-physical systems. Together, the Dynamic Attention Mechanism and Adaptive Knowledge Distillation allowed real-time adaptation to environmental changes while maintaining high accuracy and minimizing resource consumption.
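WER and CER are both edit-distance metrics: the minimum number of substitutions (S), deletions (D), and insertions (I) needed to turn the hypothesis into the reference, divided by the number of reference words or characters (N), i.e. WER = (S + D + I) / N. A minimal sketch of computing them with a Levenshtein distance follows; the function names are illustrative, and it skips the text normalization (casing, punctuation) that a real evaluation would normally apply first.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (unit cost per edit)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,             # deletion of r
                cur[j - 1] + 1,          # insertion of h
                prev[j - 1] + (r != h),  # substitution (free if equal)
            )
        prev = cur
    return prev[-1]


def wer(reference, hypothesis):
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    return edit_distance(list(reference), list(hypothesis)) / len(reference)


# One substitution (sat -> sit) and one deletion (the) over 6 reference words.
print(wer("the cat sat on the mat", "the cat sit on mat"))
print(cer("kitten", "sitting"))
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.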

Dynamic attention enhanced the system’s robustness, allowing the models to adapt to new conditions without retraining. This made the solution particularly useful for real-world deployment in edge computing scenarios, where resources are constrained and noise conditions are unpredictable.