Overview:

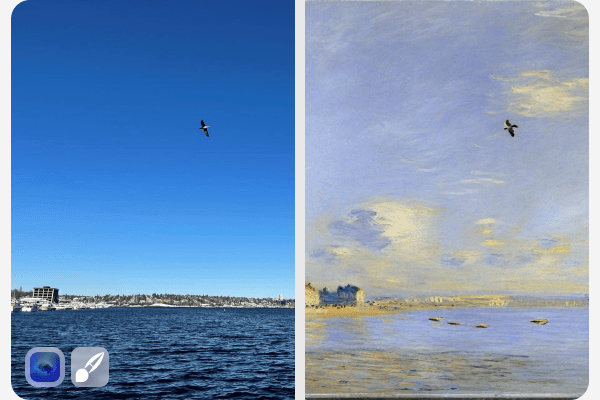

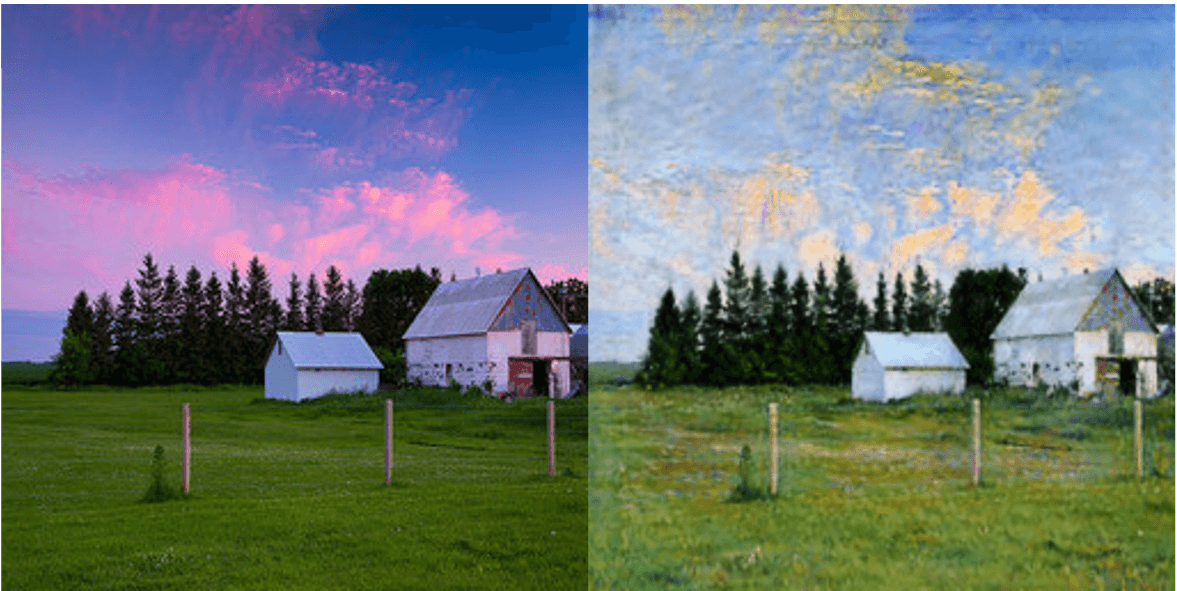

AI Brush is a deep learning project that transforms landscape photographs into the painting style of Claude Monet. It investigates unpaired image translation through three models: CycleGAN, a fine-tuned diffusion model, and Stylized Neural Painting. Alongside the technical work, the project ran user surveys to evaluate the aesthetic appeal and authenticity of the AI-generated art compared with genuine Monet paintings.

Core Challenges and Approach:

Replicating Monet’s artistic style presents several challenges, including his signature loose brushwork, soft contours, and intricate interplay of light and color. Traditional style transfer methods struggle to maintain both artistic integrity and content preservation when transforming images into impressionistic art. To address these challenges, the project employed three approaches:

CycleGAN (Generative Adversarial Networks):

CycleGAN performs style transfer between two domains (photographs and Monet paintings) without requiring paired datasets. It uses two generators, one translating in each direction, and two discriminators, one per domain, each judging whether an image is a real sample of its domain or a generated one.

Cycle-consistency loss requires that an image translated to the other domain and back closely matches the original, which preserves content and keeps the translation approximately reversible. The discriminators learn to distinguish real Monet paintings from generated ones, pushing the generator toward more convincing artistic renditions.
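
The round-trip idea behind cycle-consistency can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's training code: the function names and the toy "generators" (simple arithmetic maps standing in for convolutional networks) are assumptions made for the example, and the L1 distance is the form commonly used with CycleGAN.

```python
import numpy as np

def cycle_consistency_loss(photo, monet, g_photo_to_monet, g_monet_to_photo):
    """Mean absolute error between each image and its round-trip reconstruction."""
    # photo -> Monet style -> back to photo
    photo_cycled = g_monet_to_photo(g_photo_to_monet(photo))
    # Monet -> photo style -> back to Monet
    monet_cycled = g_photo_to_monet(g_monet_to_photo(monet))
    photo_term = np.mean(np.abs(photo_cycled - photo))
    monet_term = np.mean(np.abs(monet_cycled - monet))
    return float(photo_term + monet_term)

# Hypothetical stand-ins for the two generators (real ones are neural networks).
def to_monet(x):
    return x * 0.5 + 0.25

def to_photo(x):
    return (x - 0.25) / 0.5  # exact inverse of to_monet

def lossy_to_photo(x):
    return x  # does not undo to_monet, so the round trip changes the image

rng = np.random.default_rng(0)
photo = rng.random((3, 8, 8))  # toy 3-channel 8x8 "photograph"
monet = rng.random((3, 8, 8))  # toy 3-channel 8x8 "painting"

perfect = cycle_consistency_loss(photo, monet, to_monet, to_photo)
lossy = cycle_consistency_loss(photo, monet, to_monet, lossy_to_photo)
```

When the two mappings invert each other, the loss is essentially zero; when the return mapping discards the change, the loss grows, which is the signal that penalizes content drift during training.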

Diffusion Models:

This method fine-tunes Stable Diffusion with LoRA (Low-Rank Adaptation), which trains small low-rank weight updates instead of the full model, and gives control over both structure guidance and content extraction. ControlNet supplies detailed structural guidance from the input image, ensuring that the transformed image retains critical landscape details.
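
To make the LoRA idea concrete, the sketch below applies a low-rank update to a single linear layer in NumPy. It is an illustration under assumed sizes (a 64x64 weight, rank 4, scaling factor 8), not the project's fine-tuning setup; in practice the update is applied to layers inside Stable Diffusion and the matrices `A` and `B` are learned.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank, alpha = 64, 64, 4, 8.0  # illustrative sizes, not from the project

W = rng.normal(size=(d_out, d_in))              # frozen pretrained weight
A = rng.normal(scale=0.01, size=(rank, d_in))   # trainable down-projection
B = np.zeros((d_out, rank))                     # trainable up-projection, zero-initialized

def lora_forward(x, W, A, B, alpha, rank):
    """Base layer output plus the scaled low-rank correction (alpha / rank) * B @ A."""
    return x @ W.T + (alpha / rank) * (x @ A.T) @ B.T

x = rng.normal(size=(2, d_in))
y0 = lora_forward(x, W, A, B, alpha, rank)

full_params = W.size            # parameters updated by full fine-tuning
lora_params = A.size + B.size   # parameters updated by LoRA
```

Because `B` starts at zero, the adapted layer initially reproduces the pretrained layer exactly, and only `A` and `B` (512 values here, versus 4096 in `W`) need to be trained.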

CLIP (Contrastive Language-Image Pretraining) and GPT are employed to generate content prompts, providing contextual information for fine-tuning the model based on the desired artistic characteristics, such as lighting and brushstroke style.

Stylized Neural Painting:

Using the VGG19 model, this method extracts high-level image features and performs a style transfer by combining style loss (based on the Gram matrices of the target style image) with pixel-level loss (L1 loss) and optimal transportation loss to enhance visual coherence. This method focuses on improving the spatial alignment between the content and the stylistic features.
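
A Gram-matrix style loss of the kind described above can be sketched as follows. The random arrays stand in for VGG19 feature maps, and the normalization by `channels * height * width` is one common convention rather than something stated in this write-up, so treat the details as assumptions.

```python
import numpy as np

def gram_matrix(features):
    """Map (channels, height, width) features to a (channels, channels) Gram matrix."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    # Channel-to-channel correlations, averaged over positions (assumed normalization).
    return flat @ flat.T / (c * h * w)

def style_loss(generated_features, style_features):
    """Mean squared difference between the two Gram matrices."""
    diff = gram_matrix(generated_features) - gram_matrix(style_features)
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
style_feat = rng.random((4, 6, 6))  # stand-in for VGG19 activations of a Monet painting

# Same activations with spatial positions shuffled identically in every channel.
perm = rng.permutation(36)
shuffled = style_feat.reshape(4, 36)[:, perm].reshape(4, 6, 6)

other = rng.random((4, 6, 6))       # unrelated activations

loss_shuffled = style_loss(shuffled, style_feat)
loss_other = style_loss(other, style_feat)
```

The shuffled features give a loss of essentially zero because the Gram matrix records which channels fire together, not where. That is why this term captures texture and palette, while the pixel-level and transport terms are needed to keep the layout of the scene.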

Key Technical Components:

Style Loss: Computed as the squared error between the Gram matrices of the generated image's features and those of the style image. This helps align the textures and patterns in the generated image with those of Monet's paintings.

Optimal Transportation Loss: Measures the minimum cost of moving the pixel-intensity distribution of the transformed image onto that of the original image. Minimizing it keeps the core content recognizable.

Multi-Model Architecture: All three methods (CycleGAN, Diffusion, and Neural Painting) were compared to assess their effectiveness in preserving content while faithfully applying the Monet style.
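
The optimal transportation loss listed above can be illustrated with a deliberately simplified one-dimensional case. The write-up does not give the project's exact formulation, so the sketch below is an assumption: it compares intensity histograms of two grayscale images and uses the fact that, in one dimension, the transport cost equals the accumulated difference between the two cumulative distributions.

```python
import numpy as np

def intensity_histogram(image, bins=16):
    """Normalized histogram of pixel intensities in [0, 1]."""
    hist, _ = np.histogram(image, bins=bins, range=(0.0, 1.0))
    return hist / hist.sum()

def transport_cost_1d(p, q):
    """Wasserstein-1 distance between two histograms on the same evenly spaced bins."""
    bin_width = 1.0 / len(p)
    # In 1D, the minimum transport cost is the area between the two CDFs.
    return float(np.sum(np.abs(np.cumsum(p) - np.cumsum(q))) * bin_width)

# Toy grayscale images with a single intensity each (illustrative values).
dark = np.full((8, 8), 0.10)
mid = np.full((8, 8), 0.55)
bright = np.full((8, 8), 0.90)

h_dark = intensity_histogram(dark)
h_mid = intensity_histogram(mid)
h_bright = intensity_histogram(bright)

cost_same = transport_cost_1d(h_dark, h_dark)
cost_near = transport_cost_1d(h_dark, h_mid)
cost_far = transport_cost_1d(h_dark, h_bright)
```

Unlike a plain per-pixel difference, the cost grows with how far intensity mass has to move, so `cost_far` exceeds `cost_near`. Transport losses applied to full images follow the same principle over pixel positions, typically with an approximate solver.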

Key Features:

Multi-Model Comparison: AI Brush applies three deep learning models to the same task and compares their ability to generate artistic renditions. The transformations are evaluated both qualitatively (visual inspection) and quantitatively (metrics such as style loss).

Real-Time Artistic Transformation: Users can interact with the system in real time, transforming landscape photographs into high-quality Monet-style art.

User Surveys for Feedback: The project conducted detailed user surveys, both online and in-person, to assess the aesthetic appeal and authenticity of the AI-generated art. Users were asked to evaluate images based on various factors, including their resemblance to Monet's style, comfort, and overall artistic appeal.

Statistical Analysis: Quantitative analysis was performed using metrics such as style loss and optimal transportation loss. These metrics, alongside qualitative feedback from user surveys, were used to measure the effectiveness of each model in replicating Monet's style.

Outcome:

The project produced valuable insights into the capabilities and limitations of current AI-based style transfer methods. Key findings included:

CycleGAN performed well in preserving content and generating realistic Monet-style transformations but occasionally struggled with fine details, especially in complex compositions.

Diffusion Models provided more control over the structural details of the images, maintaining the integrity of the landscape while applying Monet’s style with higher fidelity.

Stylized Neural Painting achieved high visual coherence, particularly in color harmony and texture replication, though it required fine-tuning to balance style loss and content preservation.

User Survey Results:

The survey involved participants from varied backgrounds, assessing the AI-generated art alongside real Monet paintings. Key results from the survey included:

Aesthetic Appeal: Most users rated the AI-generated art as aesthetically appealing, particularly noting its ability to capture the softness and color dynamics of Monet’s style.

Comfort and Naturalness: Participants reported that the AI-generated images were generally comforting and aligned with the natural beauty depicted in Monet’s works.

Authenticity: While the models generally succeeded in producing Monet-like renditions, users noted that certain subtle characteristics, like brushstroke precision and fine color gradients, could be improved.

The AI Brush system successfully replicated many defining features of Monet’s style, such as loose brushwork and distinct color usage, making it a practical application of AI in the domain of artistic style transfer. The project’s results also uncovered areas where AI-generated art excelled and areas that could benefit from future enhancements, such as finer control over texture and better representation of light.