Overview

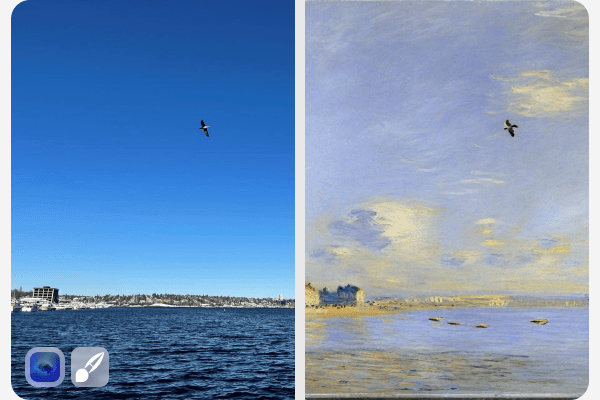

The project developed a cross-modal emotion recognition system that analyzes student engagement from facial expressions and voice tone in educational settings. It was built to help educators adjust their teaching in real time, based on students' emotions and attention levels during online lectures.

Innovative Approach

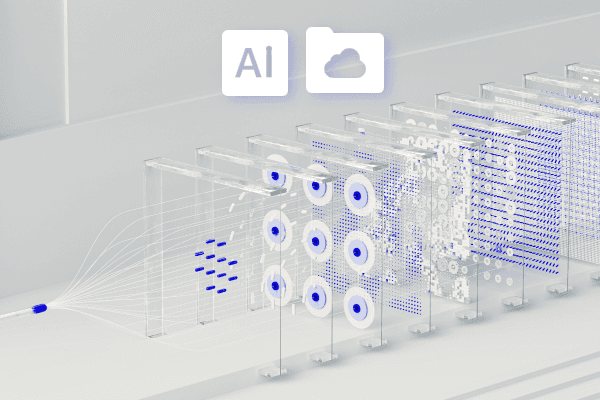

The system combined convolutional neural networks (CNNs) for facial expression analysis with recurrent neural networks (RNNs) for voice analysis to identify emotional states such as boredom, confusion, and engagement. These emotional markers were then turned into insights for teachers, enabling more effective and adaptive instruction.

Facial Expression Recognition: Deployed a CNN model to detect key facial expressions linked to student emotions.

Voice Tone Analysis: Used an RNN model to analyze speech patterns, recognizing changes in tone, speed, and pitch.
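
The write-up does not say how the two models' outputs were combined, so the following is only a minimal sketch of one common option, late fusion, in which each model emits a probability per emotion and the two distributions are averaged with a weight. The label set comes from the emotions named above; the function names, the `face_weight` parameter, and the example probabilities are hypothetical and are not taken from the project.

```python
from typing import Dict

# Emotional states named in the text; the real system may use a different label set.
EMOTIONS = ("boredom", "confusion", "engagement")


def fuse_predictions(
    face_probs: Dict[str, float],
    voice_probs: Dict[str, float],
    face_weight: float = 0.5,  # assumed value, not from the project
) -> Dict[str, float]:
    """Late fusion: weighted average of two per-emotion probability distributions."""
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be between 0 and 1")
    fused = {
        emotion: face_weight * face_probs[emotion]
        + (1.0 - face_weight) * voice_probs[emotion]
        for emotion in EMOTIONS
    }
    # Renormalize so the result still sums to 1 even if the inputs were slightly off.
    total = sum(fused.values())
    return {emotion: p / total for emotion, p in fused.items()}


def top_emotion(probs: Dict[str, float]) -> str:
    """Return the label with the highest fused probability."""
    return max(probs, key=probs.get)


# Illustrative outputs standing in for the CNN (face) and RNN (voice) models.
face = {"boredom": 0.2, "confusion": 0.5, "engagement": 0.3}
voice = {"boredom": 0.1, "confusion": 0.3, "engagement": 0.6}
fused = fuse_predictions(face, voice)
print(top_emotion(fused))
```

A weighted average keeps the two models independent, so either one can be retrained or replaced without touching the other. Other designs, such as feeding both feature sets into a joint model, are equally plausible for a system like this.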

System Features

Real-Time Monitoring: Provided live feedback to educators, enabling immediate adjustments to teaching methods.

Engagement Reports: Generated detailed reports post-lecture to help educators understand student reactions over time.
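
The two features above could be sketched roughly as follows. This is an illustration under stated assumptions, not the project's implementation: it assumes the models yield a per-frame engagement score between 0 and 1 and a timestamped emotion label, smooths the live score with an exponential moving average, and summarizes labels per time window for the post-lecture report. `EngagementMonitor`, `build_report`, and all thresholds and window sizes are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple


class EngagementMonitor:
    """Smooths live engagement scores and flags sustained drops."""

    def __init__(self, alpha: float = 0.3, alert_below: float = 0.4) -> None:
        self.alpha = alpha              # weight given to the newest score (assumed)
        self.alert_below = alert_below  # alert threshold (assumed)
        self.smoothed: Optional[float] = None

    def update(self, score: float) -> bool:
        """Fold in one score in [0, 1]; return True if the teacher should be alerted."""
        if self.smoothed is None:
            self.smoothed = score
        else:
            self.smoothed = self.alpha * score + (1.0 - self.alpha) * self.smoothed
        return self.smoothed < self.alert_below


def build_report(
    samples: Iterable[Tuple[float, str]], window_seconds: int = 60
) -> Dict[int, Dict[str, float]]:
    """Group (timestamp_seconds, emotion) samples into windows and return each emotion's share."""
    counts = defaultdict(Counter)
    for timestamp, emotion in samples:
        counts[int(timestamp // window_seconds)][emotion] += 1
    report = {}
    for window in sorted(counts):
        total = sum(counts[window].values())
        report[window] = {label: n / total for label, n in counts[window].items()}
    return report


# Live monitoring: a single low score does not trigger an alert, a sustained drop does.
monitor = EngagementMonitor(alpha=0.5, alert_below=0.4)
for score in (0.8, 0.2, 0.2):
    alert = monitor.update(score)
print(alert)

# Post-lecture report: share of each emotion per one-minute window.
samples = [(5, "engagement"), (20, "engagement"), (40, "boredom"),
           (50, "engagement"), (70, "confusion"), (95, "confusion")]
print(build_report(samples))
```

Smoothing keeps a single misclassified frame from interrupting the teacher, at the cost of a short delay before a real drop is flagged. The windowed summary trades per-frame detail for a view of how reactions shifted over the lecture.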

Impact

The system contributed to a 40% improvement in student engagement by making lectures more interactive and responsive to students' emotional states as they occurred.